This little triptych makes me happy... I love wearing these vivid purple socks, but they're nearly five years old. Luckily I still had the yarn left over from making them, and the soles of handmade socks consist of "stockinette stitch", which is easy to darn. Done! I plan to get at least another five years out of these.

Sunday, December 14, 2008

Monday, December 08, 2008

The HTTPMail proposal I mentioned here now has a discussion list. I've already received a few good comments, building a list of changes to make when I go back into editing the draft. However, I'm in no hurry to do so. People are still discussing[1] what they think it's for; which use cases they believe in or not, and which use cases I forgot to mention. That's a good discussion to have before nailing down what features it has to have.

[1] discussion so far on the general Apps Discuss mailing list; discussion really hasn't started up on the special purpose list yet.

Friday, December 05, 2008

This is pretty exciting news from Amazon: they're launching a service to freely host public data sets. Part of building openfindings.org was about that problem, but Amazon can do a better job of storage than a smaller organization can. Problems still to be solved:

- finding the right dataset -- somebody needs to do data-focused searches on terms like "lyme disease" or "lung cancer mortality", and this probably needs a bit of ontology work

- automatically generated visualizations appropriate to well-known kinds of data

- Wikipedia-style annotation, comments, highlighting: people not only mining and analyzing the dataset in private, but also in public and benefiting from each other's work

Monday, December 01, 2008

I'm following up on my last rant on multiple transports. It's a topic with nuance, but I still think it's a worthwhile caution to avoid multiple transports in non-realtime standard protocols.

Yngve talked about multiple transports used by Opera Mini for cell phone Web browsing. This is in the context of what appears to be a proprietary protocol; at least it's not standardized. Opera Mini talks to a transcoder run by Opera, so interoperability is mostly handled by not having multiple vendors of the client or the server. Skype was another example, but again Skype does not use a standard. More generally, Yngve talked about TCP-based applications that need to bypass firewalls and can use HTTP as a tunnel. Yngve, you're right about the general world of protocols, but what do you think about broadly standardized protocols?

Ralph and Joe pointed out that there are multiple implementations of BOSH, the HTTP binding for XMPP. This is interesting; I was aware of the proposal but had no idea how widely adopted it is. If it really becomes universally supported in servers, then we could write any new application on top of XMPP and it will work over HTTP as well as natively over TCP. Now that's interoperability!

Monday, November 24, 2008

Herewith, a rant: multiple transport bindings considered harmful.

I still hear this and it's getting increasingly annoying. People consider it a good thing for a standard to be able to run over HTTP, BEEP and something else. Has this ever proven to be a good idea? Layering is good for other reasons, but not because it gives implementors a choice that leads to interoperability failure in many cases.

Is it a failure on the part of the designer to understand the usage characteristics of their protocol, and successfully map that onto TCP (connection-oriented), HTTP (stateless respond-and-forget) or something else?

SOAP is supposed to be transport-independent and offer choice, but as I overheard last week, there's a reason they call it web services. And the ultimate in multiple-transport wankery: I once heard somebody propose a schema which they said would run over SOAP or HTTP.

Are there use cases I'm unaware of, where this has been a really good thing for some standard?

Wednesday, November 12, 2008

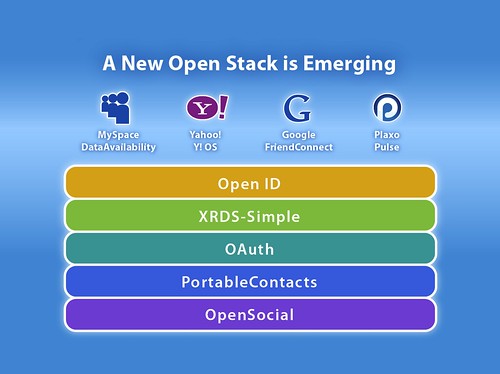

I'd like to be able to explain the important confluence of several ad-hoc Web standards to various people, so I paid attention Monday at IIW. This is the diagram I saw:

I can't call this a stack. I just can't. It's not a protocol stack or an API stack or a library stack. The best I can say is that "Some people call it a stack". There's no layering relationship between these things; there's not even a sequence or an order.

PortableContacts extends Open Social and the rest are independent proposals that work well together. It's a suite, a cluster or a collection of protocols/APIs. Here's a first stab at a moderately simple diagram that does not mislead:

Comments?

Sunday, November 09, 2008

The idea of using native HTTP resources to RESTfully access an email store is not only an old idea, it's been implemented many times. Some Web mail architectures are even somewhat RESTful although the purest implementations are not themselves Web UIs -- these RESTful email interfaces have typically been built to support Web UI frontends in a classic tiered architecture.

I have been talking about this for so long that it seems self-explanatory. Emails can be HTTP resources with persistent URLs and machine-readable representations. Mailboxes can also be HTTP resources with persistent URLs and machine-readable listings of contents; we just have to agree how to represent those listings. Here's my proposal.

Atom feeds are my choice for organizing those listings. I think it's worth explaining the two overriding reasons why.

- Atom allows clients to just GET a representation of a feed, and the feed can contain an arbitrarily long list of items, but paged according to the server's needs. This is a great allocation of responsibility, making client logic simple and putting server performance in the server's hands.

- The default model for feeds is that the same object can be in more than one feed. This is very important for the usability of email going forward. I know a few people who organize their mail into strict hierarchical collections, typically using IMAP, and find old email by jumping to the right place in the hierarchy -- but I know far more who rely on giant inboxes, saved searches, flagged email or tagging. The default model for feeds directly accommodates all those usage models.
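
To make those two points concrete, here is a minimal sketch of a client listing a mailbox. It is not part of the proposal itself: the URLs, message ids and the `fetch` helper are all made up for illustration, and it assumes the server marks further pages with `rel="next"` links in the style of RFC 5005. A real client would do an HTTP GET where this one looks up a dictionary.

```python
import xml.etree.ElementTree as ET

ATOM = "{http://www.w3.org/2005/Atom}"

# Hypothetical mailbox feeds keyed by URL (stand-ins for HTTP responses).
PAGES = {
    "/mail/inbox": """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Inbox</title>
  <link rel="next" href="/mail/inbox?page=2"/>
  <entry><id>urn:msg:1</id><title>Lunch?</title></entry>
  <entry><id>urn:msg:2</id><title>Draft comments</title></entry>
</feed>""",
    "/mail/inbox?page=2": """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Inbox</title>
  <entry><id>urn:msg:3</id><title>Meeting notes</title></entry>
</feed>""",
    # The same message (urn:msg:2) also appears in a second feed.
    "/mail/flagged": """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Flagged</title>
  <entry><id>urn:msg:2</id><title>Draft comments</title></entry>
</feed>""",
}


def fetch(url):
    """Stand-in for an HTTP GET; returns the feed document as text."""
    return PAGES[url]


def list_mailbox(url, fetch=fetch):
    """Return (id, title) pairs for every entry, following rel="next" links."""
    messages = []
    while url is not None:
        feed = ET.fromstring(fetch(url))
        for entry in feed.findall(ATOM + "entry"):
            messages.append(
                (entry.findtext(ATOM + "id"), entry.findtext(ATOM + "title"))
            )
        # The server decides the page size; the client just follows "next".
        url = None
        for link in feed.findall(ATOM + "link"):
            if link.get("rel") == "next":
                url = link.get("href")
    return messages
```

The client never asks for a page size or an offset, and nothing stops `urn:msg:2` from being listed by both `/mail/inbox` and `/mail/flagged`.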

Friday, October 17, 2008

There's lots of ways to amuse even a toddler under 2 with a computer. Browse through cuteoverload or icanhascheezburger. My favourite is to find a Flickr group like Animal Parent and Offspring and browse through saying what kind of animal and baby we're looking at.

On Youtube, one of the earliest interesting things, to a baby, is videos of other babies laughing or babbling, and other parents have uploaded a bunch of those. Of course there's more cute animal videos. Elsewhere, there's kids music videos by TMBG and others.

I'm sure there's lots more but I'm not usually so strapped for ideas that I turn to a computer. I have a sore throat and don't want to get up from the couch!

Monday, October 06, 2008

Two IETF tidbits to pass on.

1. An OAuth BOF was approved, and OAuth was submitted as an IETF I-D as fodder for that BOF. I'm excited about this; we at the IETF have been rather floundering around, without much consensus or sustained progress. Two years ago (already!) at the WAE BoF we identified some possible steps forward but there was still much disagreement over basic approaches, both technical and architectural (integrate SASL into HTTP, make requirements for TLS Client auth in HTTP, invent something new for HTTP). Since OAuth is already implemented in several places, perhaps this will leapfrog us past the open-ended "what shall we build" discussions.

2. ALTO is a proposed WG for "Application Layer Traffic Optimization": focusing on P2P file sharing, how can the network provide information to peers to help them pick peers in a way that impacts other users the least? The ALTO charter was sent out for IETF-wide review, and I'm optimistic about its formation as a WG. I've requested a BOF meeting slot, but I'm hoping to turn that into a WG meeting slot.

Tuesday, September 30, 2008

This was roughly my bike route today to get to a meeting in Redwood City and back. Apparently about 17 miles, on top of my 6 mile bike between home and the office.

One of the best articles on DRM I've read in a while appears on Penny Arcade, and contains the words "DRM takes a big poo on your best customers". Actually, the full sentence is worth including:

DRM takes a big poo on your best customers -- the ones who've given you money -- whilst doing nothing practical to prevent others from 'stealing' your precious content juices.

Yeah, that's right, punish your friends and give everything away to your enemies. As artists and creators, you do deserve to make money. Perhaps there are other ways than punitive DRM, however.

I'm not into protesting games that use DRM for protest's sake, although I did avoid buying Spore because of its license checking. I just happen to like games that I can play when I like and pay for when I like. I've given money to Puzzle Pirates, which the author of the article is associated with, because the game let me have fun first before paying. Ikariam is similar but taunted me too much with the for-pay goodies so I found that pressure off-putting. Kingdom of Loathing has the best laid-back attitude towards paying and playing. Not only can I take a break without getting punished, they treat my contributions as valued donations, so I feel good when I toss them some meat.

Thursday, September 25, 2008

I've been asked a lot about use of SOAP in IETF standards. Most of the time, I think it's overkill and poor for interoperability, because it has a bunch of features that probably aren't needed, and if they are used, will not work unless everybody uses them the same way.

While using HTTP directly suffers from the same problem, at least the problem is not doubled by using SOAP over HTTP. And HTTP benefits from fantastic tool support and connectivity. So without further debate about whether HTTP itself is a bad or a good thing to use, here's how to use it in a standard.

---

- Define your objects; these will be HTTP resources. These are the objects people operate on. In Atom, these objects are entries, feeds and service documents. In CalDAV, objects are calendars and events. In a protocol for transferring karma between people, resources might represent individual karma accounts.

If you can't think of objects but only of services, you may still be able to use HTTP POST to send requests to the service handler and get a response. I guess you're treating your service handler as a kind of object but it's only minimally RESTful to do this.

- Don't bother defining the URLs to resources. They'll be HTTP URLs of some kind. Let servers choose and clients discover.

- Define operations on your objects/resources. To create a new one, you should be using PUT (if the client can reasonably construct a new URL that the server will find acceptable) or POST if the server will pick a URL. To remove an object, use HTTP DELETE. To update an object by replacing its content, use PUT.

Some common operations are defined in other HTTP extensions already. To update an object by making a change to it, use PATCH. To move or copy an object, use MOVE or COPY.

- Define how clients will find resources. E.g. Atom has service documents which locate feeds, and feed documents which locate blog entries. Because of Atom's strongly document-oriented approach, this means Atom uses GET requests for navigation and resource discovery. CalDAV, by contrast, uses WebDAV collections for containers, and therefore uses PROPFIND for the same purpose.

You may find in this part of the exercise that you've invented new objects to help navigation and resource discovery work well. Reexamine your object model.

- If you need permissions, use the WebDAV ACL model if possible. Hold your nose if you need to. It's better than building one yourself. Leave permissions out entirely if you can wait a revision.

- Figure out if you've got any magic or use cases that aren't handled. E.g. CalDAV needed some magic to query calendars to find out "what events occur or reoccur this week". That wasn't solved with a RESTful PROPFIND request, so CalDAV defined some magic and used the REPORT method to carry the request for magic. Define your own new method for this one if you're dying to exert some creativity.

- Figure out the details. There are a fair number. For example, define whether resources have fixed MIME types, whether caching is appropriate, and whether ETags are required. It's probably a good exercise to walk through the section headings of the entire HTTP spec and ask "Is this required to support, required not to use, or does it interact with our new protocol in some funny way?"
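
As a rough illustration of the first few steps, here is a toy version of the karma-account example. Everything in it is an assumption made for the sketch: the `KarmaStore` class, the `/accounts/` listing URL and the dictionary representations are invented, there is no real network involved, and permissions, PATCH, ETags and the rest of the details are left out.

```python
import itertools


class KarmaStore:
    """Toy in-memory stand-in for an HTTP server; all names are hypothetical."""

    LISTING = "/accounts/"

    def __init__(self):
        self.resources = {}             # URL path -> representation (a dict)
        self._ids = itertools.count(1)  # used when the server picks the URL

    def handle(self, method, path, body=None):
        """Return (status, headers, body) for a single request."""
        if method == "GET":
            if path == self.LISTING:
                # Discovery: clients learn account URLs from this listing
                # instead of having the URL layout written into the spec.
                return 200, {}, sorted(self.resources)
            if path in self.resources:
                return 200, {}, self.resources[path]
            return 404, {}, None
        if method == "POST" and path == self.LISTING:
            # The server picks the URL and reports it in Location.
            url = "%s%d" % (self.LISTING, next(self._ids))
            self.resources[url] = body
            return 201, {"Location": url}, None
        if method == "PUT" and path != self.LISTING:
            # Client-chosen URL: create the resource or replace its content.
            created = path not in self.resources
            self.resources[path] = body
            return (201 if created else 204), {}, None
        if method == "DELETE" and path in self.resources:
            del self.resources[path]
            return 204, {}, None
        if method == "DELETE":
            return 404, {}, None
        return 405, {}, None
```

A client would POST to the listing to open an account, read the `Location` header to learn the new URL, and from then on use GET, PUT and DELETE on that URL.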

---

Ok, I wrote this quickly to get it out and I'd love feedback. There are probably other recipes; this is the one I use. Exceptions exist. Void where prohibited.

Monday, September 08, 2008

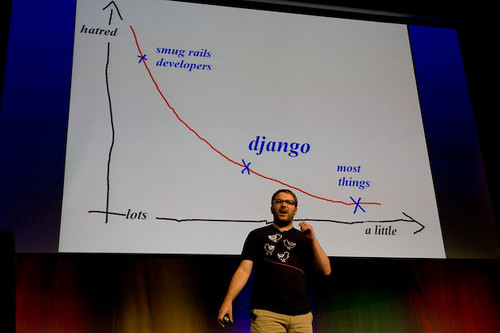

DjangoCon 1.0 was great, and now I've got the T-shirt, as shown at left (one of 100 printed -- in a real female size, no less, thanks to Leah.) The photo is from my lightning talk wherein I introduced myself and gave 10 reasons why people who use Django might be interested in IETF and how I could help. Also I'm tickled because the photo was taken by Simon Willison, whose blog I've been reading ever since I started using Django.

It's always nice to meet people with common interests, people I've gotten some awareness of online, but it was especially lovely this time.

As Cal Henderson (shown left, pic by webmonkey_photos on flickr) pretty much said in the funny roast-style keynote entitled "Why I Hate Django", the core Django people are just too damn nice. I also had moment after moment of future shock, just thinking about how many more tools we have for self-organizing as groups of people. Blogs, forums, voting widgets, comment fields, shared calendars and shared docs, collaborative bookmarking and tagging. Yes, none of these are new to Django but Django makes sites with these features very easy to create. I got started on dial-in text-only bulletin boards, people -- we're talking 28 kb modem -- consciously moving my reference point further back than it normally is. And on the other side of the coin, Django is such a useful project because of the online collaboration of loosely connected individuals. That's some heavy bootstrapping there.

Wednesday, August 27, 2008

I am going to attend the first DjangoCon in a couple weeks. Sorry if you have heard about this too late; all the spots are taken. The Django 1.0 release party is still open, however.

Django has been great to use. It's a huge leap from how I used to have to do data-backed Web sites. It does the same things Rails does but for a Python environment, which I prefer.

I look forward to meeting other django-heads for the first time since I started using it. I'm sure I can learn a lot.

Wednesday, August 20, 2008

Warning: Knitting content has returned, because I need a place to post this picture.

Claudia asked for solutions to keeping sock knitting on the sock needles while carrying it around, and my favorite solution is a little fabric pocket. I measured the fabric an inch wider than the length of the sock needles in question, on the bias so that there would be stretch. Then the pocket is sewn up as shown in the diagram, with extra seams to keep the sock needles from popping out. The knitting hangs out the opening of the pocket, with the flap to fold over (probably optional).

I did my own two pockets in cotton and silk, finishing the raw edgings with a little rolled hem. A super fast hemless solution would be to use a small square of slightly stretchy fleece fabric, which needs no edge finishing.

The reason this works as well as the point protectors is that the hole on the pocket is smaller than the needles are, so it holds the knitting -- remember, the knitting remains mostly outside of the pocket -- narrowly on the center of the needles. It's the same principle as the sock knitting tubes sold here but cheaper.

Tuesday, August 19, 2008

Google announced support for the CalDAV standard, and explained how to view a Google calendar in Apple's iCal client. There are a few glitches but it's a developer-focused release and they've solicited bug reports.

I would be very interested to know how their WebDAV logic (underlying CalDAV) plays with their Atom logic (underlying the GData API). I'm sure Google Calendar is not the only implementation to support both protocols to view and modify the same data, and there will be more.

Wednesday, June 25, 2008

Biking around and trailing the toddler is all very well, until you hurt your lower back pushing those pedals, and then have to pick up said toddler. And when I say "you" here, I mean "me" (don't you love the vagueness of the impersonal second person, or "generic you").

The resident expert advised me to pedal faster at lower gears, and to spin the pedals around rather than push them down. Apparently cadences of more than one pedal revolution per second (that is, over 60 RPM) are common for real cyclists. I started saying "Left, right, left, right" to myself to try to get a feel for this rhythm, and it seemed reasonable until I actually got on the bike again and tried to pedal that fast. It feels too fast! Will definitely take some getting used to.

While this may all be very logical and reasonable, it's evidently not what I'm used to. When we were kids we used to have durable, stiff bikes and we'd stand up on the pedals alternately to get moving, putting our full weight on each slow stroke. Besides, the seats were torn up anyway by mistreatment, so why even sit on the seat. I think when I was thirteen, or maybe even twelve, my parents got me a full-size men's mountain bike, figuring it was the last bike they'd buy me before I moved out. It lasted me through university too as I recall. Of course it didn't fit me -- frames for men are too long for most female torsos -- but we didn't know any better about that either.

Monday, June 23, 2008

I recently scanned through Flow, by Mihály Csíkszentmihályi, a book I first read a decade ago. It didn't improve on rereading -- it's not a bad book, but it has the kind of overblown promises I've come to recognize in a certain category of books by obsessed people. Greater world peace, less illness, better personal finances and fewer unwed teen mothers would result if more people learned to experience more flow. Another book in this category is The Promise of Sleep, by Dr. William C. Dement: if only more people got better sleep we'd see better health, less unemployment and many fewer traffic accidents. Any others?

My husband has been ranting about another category of books: books about a particular invention that "changed the world". The smaller the subject the funnier these are, and these are easy to find with an Amazon search:

- The Machine That Changed the World -- about "lean manufacturing" as introduced by Toyota, basically a PR exercise for Toyota according to reviews.

- Rome 1960: The Olympics that Changed the World

- The Night of the Long Knives: Forty-Eight Hours That Changed the History of the World

- Nixon and Mao: The Week That Changed the World

- The Map That Changed the World: William Smith and the Birth of Modern Geology

- The Gun that Changed the World -- about the Kalashnikov

- Mersey the River That Changed the World

- The Fibre that Changed the World: The Cotton Industry in International Perspective, 1600-1990s

- The Box That Changed the World: Fifty Years of Container Shipping - An Illustrated History

- Tea: The Drink That Changed the World

- Banana: The Fate of the Fruit That Changed the World

- Cod: A Biography of the Fish That Changed the World

Saturday, June 21, 2008

I have a yoga rant today. Why are yoga classes so seldom both strong and safe? By "strong" I mean including poses that take strength to hold, and that are held for five or more long breaths, such that the class overall makes one sweat and improves muscle tone. Load-bearing exercise is important for women of all ages for late-life bone strength, and men seem to like these types of classes better because they include some poses that men are good at.

By "safe" I mean that dangerous poses, ones that can harm the wrists or back or knees, are not as frequent and come with careful instruction and warning, and the teacher explains how to do the pose safely and also explains who should not do the pose. Some of the things I hear from yoga teachers I consider "safe":

- Push the base of the fingers down into the mat in poses that put weight on the wrist; this engages the muscles of the hand to protect the wrist.

- Remember to flex the foot in poses that open the hip by using the whole leg as a lever (e.g. the "knee to ankle" pose). Again this activates the muscles that hold the joint solid, rather than stress the ligaments.

- "If this hurts in this way, stop."

Instead, the normal tendency when teachers focus on yoga that doesn't hurt, is to make the whole class easier; while the tendency when building a class for advanced or strong students is to do dangerous poses without teaching how to do them safely.

Anyway, I do know a teacher, currently teaching, who excels at combining strength and safety, although I don't have a schedule that allows me to take her classes right now.

Wednesday, June 18, 2008

I am not experiencing flow doing Django Web form programming. Don't get me wrong, it's powerful and good, but there is something seriously messing with my ability to keep working. As usual, I need to look up Python and Django and HTML forms details every so often, but this is normal and I've been able to maintain focus doing other kinds of programming.

To build Web forms, I create a new HTML page and see that it loads, add a form element and a form field, see if it shows up, add a submit button, test that it works without being wired up, wire it up to a form submission URL and write a dummy handler, see that the dummy handler is called, replace the dummy handler code with real handler code. Each of these steps takes only a minute or two and after many of them I find I have to stop working rather than go on to the next step. It's worst when I have to figure out "what next" when I'm done with one whole form, at least for the time being. Perhaps it's the constant swapping between deciding "What am I doing" and "How do I do what I decided to do".

Monday, June 09, 2008

This was my route and itinerary by bike last Friday:

I biked three miles to the Palo Alto train station, caught an earlier train than I intended, and arrived 45 minutes early for my 10am meeting at point B. I left there just before 11 am and saw two bridges and much waterfront on my way to point C, where I was 30 minutes early for my noon meeting.

I left C at 1:30 and was 20 minutes early for my 2:30 appointment at point D. Finally I left there at 3:30 and had a last appointment near A before biking back to Caltrain. This SF loop is 12.5 miles according to Google, and I optimized it to have very little hill-climbing, but this is SF, folks. There were some hills.

The Caltrain ride home was very much slowed by a pedestrian accident past Palo Alto, so I got off the train at the unscheduled Menlo Park stop and biked home: 4 miles.

A total of 19.5 miles on bike, all in a skirt with my demo laptop on my back. I loved it!!

Wednesday, June 04, 2008

Tuesday, June 03, 2008

I visited the Asian Art museum of San Francisco a few years ago with a good friend. We were struck by all the buddhist iconography embodied in the hair of painted gods: peaceful gods had softly flowing blue hair, while wrathful deities had fiercely curling fiery red hair. Like Jasmin:

After seeing all these fierce hairdos, my friend and I immediately went home and dyed our hair bright red. But Jasmin really rocks that look!

UPDATED: I didn't mean to imply this photograph is one I took, but I forgot to give credit for it. 'gretch_dragon' took the photo.

Monday, June 02, 2008

Some news related to my role as an Applications Area Director, for a change. Last week was the P2P Workshop organized by Jon Peterson and Cullen Jennings. I didn't take any notes so I won't have many names to drop, but I learned a lot. In the morning we heard talks from operations guys at Comcast and from Stanislav Shalunov, who works on one of the BitTorrent clients. The basic summary of each of the talks was that despite their differences they'd like to work together and with the IETF for the good of the Internet. Comcast talked about their commitment to net neutrality and the costs/challenges of providing cable service -- neat stuff about how upload bandwidth is completely separate from download bandwidth, and how BitTorrent is unusual among applications in how much upload bandwidth it uses. Stanislav talked about all the logic they have in the client he works on to be careful about overloading the network despite having very little information available on how to ration network usage.

In the afternoon, I recall a talk on "Localization". I'm used to this meaning translating software into another language, but in this case the meaning was to find a server that's "local" in network terms before attempting to do something. Leslie Daigle and Henning Schulzrinne also gave talks.

Some of the takeaways for the Applications area could be:

- Documenting the BitTorrent protocol in a stable document

- Standard on how to find an appropriate mirror or location for a resource that's available on multiple servers -- this is re-implemented all the time in the wild, with different confusing HTML interfaces on each implementation

- Standard on how to find a local server -- this could be a subproblem of the basic service discovery problem (given a particular domain), or it could be more sophisticated (not just one domain but based instead on network topology)

- More ways to find out network information -- this could include information on bandwidth available, bandwidth quota, local servers, caches etc.

I don't know exactly how followup from this meeting may turn into Internet-Drafts, WGs or other, so that's all the information I have for now and no predictions of whether people will actually volunteer to do any of the above. But even as is, I thought the workshop was useful for bringing people closer to the same page.

Wednesday, May 21, 2008

About a year ago, I worked with Jeff Lindsay for a couple months. He worked on the demo for the project I'm still working on, and he set me up with a source code repository, wiki and ticket system: all an integrated part of his devjavu.com work. Since I hate maintaining these development services but must have them, it's a perfect solution for me. I recommend it.

BTW, today Jeff showed me how to give anonymous permissions to the source code repository, so I did. Here's what I'm working on :)

Thursday, May 15, 2008

I've been writing import tools (not quite screen scraping, but close). Today I used those import tools to grab a bunch of CDC data on causes of death. This can get a little depressing at times. Sometimes I notice a large number of people died of something I didn't even realize was a cause of death. Look at the skew to age in death attributed directly to dementia:

Other times, it's nice to note that hardly anybody dies of something (e.g. cannabis usage, such low numbers I didn't bother importing). Here's deaths due to all mental and behavioural disorders from use of psychoactive substance (and nearly all of them are alcohol):

Sunday, May 11, 2008

I took Zack Arias' OneLight Workshop on Friday. It was, on the whole, awesome, as many others have reported, with the personal exception explained below.

We spent several hours reviewing the science and the analysis of light, shutter speed and aperture. Being an engineer, I can pretty much memorize this level of technical stuff when it's presented as clearly as Zack does: words in my eyes (slides), words in my ears (him speaking), a couple diagrams, quite a few examples and definite humour. So far so good.

The next part of the workshop I was totally unprepared for. This was the part where Zack set up a lighting situation and a model, explained the setup, and then let everybody in turn use their own camera (but his light) to shoot the model. I was a nervous wreck and confused and embarrassed and dysfunctional.

Half of my problem -- and both halves were my problem, not the workshop's -- was that I didn't know my camera well enough. I thought I knew how to shoot in manual mode, but while nervously fiddling to get that to actually work, I screwed up several other settings on my camera. It took me another hour after that to recover proper focus, because I could not relax and look and see what was wrong. I've used other fully manual cameras before, and I've shot with the Rebel in aperture priority or shutter priority mode, but I needed to know *this* camera well enough to be able to focus on other things besides the camera's buttons and dials and screen. Ouch.

The other half of my problem was sudden incapacitating social awkwardness. I was completely bashful about working with live, volunteer models I'd never met before. I was intimidated by going before and after professional photographers who knew their cameras and who were joking comfortably with the models. I was embarrassed at wasting everybody's time, the models and the other photographers waiting to use the same light setup. I was too wrapped up in my own awkwardness to ask the models to turn their head, or move closer to the light, or up or down. Stupid, huh? They were lovely, friendly, patient models but I was completely flustered. I think I babbled incoherent apologies at them at the end of each "shoot".

As this part of the workshop continued into our third and fourth light setups, or about two hours after quietly flipping out, I started pulling things together. Several people definitely tried to be helpful and were in fact helpful (thanks Tara and of course Zack) but I was at that point where I was too self-conscious to be very effective at accepting help. I've seen other people in that position but haven't experienced it myself recently. It's a humbling reminder for when I'm in "helper" role of saying "Oh, just do it this way". I got my focus straightened out. I started adjusting my aperture in the right direction instead of consistently doing it in the wrong direction. I got to that moment where I decided to try to alter a shot by taking down the light on the background compared to the model. I worked it through: I remembered I had to increase the shutter speed to pick up less ambient light, while maintaining an aperture that picked up the flash on the subject at the same brightness. The result, while in no way a good photo -- I mean, not at all -- was at least the product of an intention that I formulated to make use of what I'd learned.
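The shutter-versus-aperture reasoning in that last step can be sketched with a little arithmetic. This is only a simplified model, not anything from the workshop materials: it assumes the shutter stays at or below the camera's flash sync speed, so the flash burst is fully captured regardless of shutter time, and the function names and the example settings (1/60 s, 1/200 s, f/5.6) are made up for illustration.

```python
import math

def ambient_stops(shutter_s, f_number, iso=100):
    """Relative ambient exposure in stops (higher = brighter).

    Ambient light accumulates for as long as the shutter is open,
    so shutter time appears in the formula.
    """
    return math.log2(shutter_s * iso / (f_number ** 2))

def flash_stops(f_number, iso=100, flash_power=1.0):
    """Relative flash exposure in stops.

    Shutter time is deliberately absent: under the assumption above,
    the whole flash burst lands inside the exposure either way.
    """
    return math.log2(flash_power * iso / (f_number ** 2))

# Hypothetical settings: same aperture, faster shutter.
before = (1 / 60, 5.6)
after = (1 / 200, 5.6)

ambient_change = ambient_stops(*after) - ambient_stops(*before)
flash_change = flash_stops(after[1]) - flash_stops(before[1])

print(f"ambient: {ambient_change:+.2f} stops, flash: {flash_change:+.2f} stops")
```

Under these assumptions the background (lit by ambient light) drops by a bit under two stops while the flash-lit subject stays put, which is the effect described above.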

It all went uphill from there. I started to be able to make a plan and imagine a look and adjust what part of the background was in the photos and how much light it had. I really didn't take a lot of good photos but increasingly I was able to make changes that I thought of. I took notes of what I was doing to cement the principles.

At the end, I finally got comfortable photographing one of the photographers. This was in the part of the workshop where we split into groups and set up our own lighting. Tara set up a shoot-through umbrella near a wood fence, and Bärbel was the fellow photographer who sat for my shooting, as I sat through hers:

I was now comfortable enough to ask Bärbel to move away from the fence, to give more separation between the light on her and the light on the fence, thus more control at dialing one up and one down. I was comfortable enough to move the umbrella/flash stand closer to her. I was still trying to be quick and didn't take a zillion shots with different facial expressions or different compositions, but since I was there to learn the lighting part that was OK. And I got this shot, and I was happy.

Thanks to our hosts who provided excellent food and a location, and the models and fellow photographers and Zack. I was too dorky to act normally but if you happen to stumble on this I want you to know I enjoyed myself and learned a lot.

Monday, May 05, 2008

I'm getting into geographical distributions and shapefiles now for the health data accessibility project. Ekr and Cullen got the basic R and maptools extension commands working for me. I tweaked it a bit and now have thumbnails and detail views (shown here, deaths attributed to HIV in white males):

One thing I've learned about freely-available shapefiles is that they have a lot of crap in them. Crap is, of course, in the eye of the beholder. In my case, I want the shapes of the regions only, and not the population, households, whites, blacks, males, females, density, age distributions, divorced, married, never married, single-parent household, household units, vacancies, mobile homes, farms, crop acres and more counts/rates, for every region.

I can probably get a shapefile editor and remove that stuff but it will only slim down the files slightly. Even bigger than all that data are the latitude and longitude of every vertex required to draw a state outline. Think coastline and all those islands in Alaska, Puget Sound, etc. Since my map sizes are limited anyway, I wonder:

- how many vertexes I could afford to lose without losing any resolution in the larger size, and how I could find a shapefile appropriate for that

- how much smaller the resulting shapefiles would be

- whether that would be any faster anyway
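One way to explore the first question is to thin the vertexes yourself. The sketch below uses the Ramer-Douglas-Peucker line simplification algorithm, which is a common choice for this but not something this project is known to use. It treats longitude/latitude pairs as flat x/y coordinates (an assumption that is only reasonable for small thumbnails), and `simplify`, `tolerance` and the sample outline are names and data invented for illustration.

```python
import math

def _point_segment_distance(p, a, b):
    """Distance from point p to the line segment a-b (planar coordinates)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        # Degenerate segment (e.g. a closed ring whose ends coincide).
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def simplify(points, tolerance):
    """Drop vertexes that lie within `tolerance` of the simplified outline."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    # Find the vertex farthest from the straight line first -> last.
    index, worst = 0, 0.0
    for i in range(1, len(points) - 1):
        d = _point_segment_distance(points[i], first, last)
        if d > worst:
            index, worst = i, d
    if worst <= tolerance:
        # Everything in between is close enough to be dropped.
        return [first, last]
    # Otherwise keep the farthest vertex and simplify each side.
    left = simplify(points[: index + 1], tolerance)
    right = simplify(points[index:], tolerance)
    return left[:-1] + right

# Made-up outline: three nearly collinear vertexes, then a sharp corner.
outline = [(0, 0), (1, 0.01), (2, -0.01), (3, 0), (3, 3)]
simplified = simplify(outline, tolerance=0.1)
print(len(outline), "->", len(simplified), "vertexes")
```

Picking `tolerance` at roughly the size of one pixel in the largest map would be one way to lose vertexes without visibly losing resolution. The recursion is fine for a sketch, but a very long coastline might need an iterative version.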

Thursday, May 01, 2008

The code review I got from Grant last weekend was really great, but I'm back to programming solo. Luckily, one of the nice things about code reviews is that when you explain something, you are likely to see problems with it before the person you're explaining to even finishes parsing your tech-tech-tech. And you don't even need the other person to make this work if you're disciplined enough to read code aloud all by your lonesome. (I can read code to the cat at home, but maybe I need a teddy bear at work.)

So here's how I read some code to myself yesterday:

Okay, first step here is we get a list of graphs to draw, it's a generator.

We go through each graph figuring out which datafiles we need to load so we only load them once.

This we know is working so far because of print statements, showing two datafiles in this case.

Now the next step, we're going through the generator again,... oh.

Yeah, you can't use a generator twice in Python and I knew it, but I didn't remember it until I heard myself speaking out loud. What's up with that?
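For anyone who hasn't been bitten by this yet, here is a minimal sketch of the problem and one common fix. It is not the actual code from the project: `graphs_to_draw` and the file names are hypothetical stand-ins.

```python
def graphs_to_draw():
    """Hypothetical stand-in for the real generator of graph descriptions."""
    yield {"title": "graph A", "datafile": "deaths.csv"}
    yield {"title": "graph B", "datafile": "incidence.csv"}

graphs = graphs_to_draw()

# First pass: collect the datafiles so each is only loaded once.
datafiles = {g["datafile"] for g in graphs}

# Second pass over the same generator: it is already exhausted,
# so this loop body never runs and no error is raised.
second_pass = [g["title"] for g in graphs]

# One fix: materialize the generator into a list before iterating twice.
graphs_list = list(graphs_to_draw())
datafiles_again = {g["datafile"] for g in graphs_list}
titles = [g["title"] for g in graphs_list]

print(len(datafiles), second_pass, titles)
```

The silent part is what makes it easy to miss: the second loop just does nothing. Turning the generator into a list costs memory, which is fine for a short list of graphs but may not be for a large stream.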

Friday, April 25, 2008

Paul commissioned me to knit a tie-dye, Grateful-Dead-inspired, tie. I made it, he gave it to the UCSC Chancellor at a press announcement. Cool!

Wednesday, April 23, 2008

Kids car seats are getting bigger (apart from the ultra-portable infant seats). Three reasons why, all related to new safety requirements:

- LATCH (Lower Anchors and Tethers for CHildren) are anchor points in cars that make installing carseats much safer since roughly 2003 when they became standard in all passenger cars. Manufacturers of car seats have made them bigger as a result. The seat needs to accommodate a strap between LATCH points as well as routing for old-fashioned seat-belts. The tether for the top of the seat, which goes over the back of the car's seat back and fastens there, has caused the manufacturers to make the back of the carseat taller and have a little more room for hardware above the kid's head.

- Around the same time as LATCH, the US has seen more stringent regulations for when car seats must be forward facing or rear facing. To save parents from buying multiple seats, there are convertible seats that can be turned around somewhere between infancy and toddler/preschool age, and still be used up to 60 pounds in some cases if the kid is not too tall. Of course, seats that can be converted from rear-facing and reclined positions to front-facing and upright positions have bulkier bases to accommodate the different LATCH points, seat belt slots and tilt options.

- New requirements and recommendations for booster seats for kids up to 6 years old and 60 pounds (or even 8 years old and 80 pounds) have been enacted in many states in the last three or four years. A pure booster seat, to raise the kid and position the belt safely, is currently thought to be best for school-age kids once they're too big to sit in a full carseat. However, in order to provide an option that parents can buy to last from pre-school through school age, many forward-facing car seats are now convertible to pure booster seats.

I'm going on five more trips with my kid in the rest of 2008. I usually need a car seat when I get to my destination. I also need something to keep my kid in the airplane seat more securely. Even if you believe airplane crashes are so low probability that it's not worth worrying about, I can vouch for the sanity that comes from a wriggly kid well-secured in an airplane seat. I held somebody else's baby during turbulence on a recent flight (while the other mom vomited repeatedly) and I was glad my own kid was asleep and strapped into a car seat with a five-point harness. He didn't even wake up during the turbulence, because I didn't have to wake him up to hold him down during the stomach-inverting drops of altitude.

Using the car seat in the airplane has become a real problem at his current height, however. It's so bulky (thick back, thick seat) that in coach he runs out of leg room and can already powerfully kick the seat in front of him. Time to start investigating alternatives, and as I found out, the alternatives do not reasonably include lightweight, narrow, FAA-approved seats that are also legal in cars in most states.

Here's how airplanes are different from cars, as far as child restraints are concerned:

- No LATCH points. I don't know if airplane seats will ever have LATCH points.

- Airplane seats are designed to recline/fold. I think this is why booster seats are forbidden by the FAA. So are seats that can convert to booster seats.

- Not as much room, obviously. It can even be hard to bring a toddler-size car seat down the aisle, let alone fit into the width and depth limitations of coach seating.

Since I still need* a car seat at my destination, I'll probably start putting the Britax Roundabout into checked luggage. *Big sigh* -- it will be years before I'm likely to travel without checked luggage again. If it weren't for carseats, I could pack my own clothing and my kid's for 3-6 days into one carry-on.

* As a final note, let me define "need" in the last paragraph. It turns out the law in BC, for example, is different for residents and visitors. Residents must use car seats for kids up to 40 pounds, but visitors can omit car seats with children over 20 pounds. On average that weight is reached at nine months! A slightly heavy baby might reach 20 pounds at an earlier age and still not be able to sit up on his own. So although the law doesn't require me to have a car seat on my upcoming trip to Vancouver, I have decided I need one.

Monday, April 21, 2008

I'm making good progress on the public health statistics visualization site. I just added the ability to use pycha's multi-line line graphs:

This shows the incidence rates of kidney cancer in the Northern California population, according to the NCCC.

Wednesday, April 16, 2008

Whew, I finally got the right minimal equipment to do off-camera flashes. With my Canon Digital Rebel, this meant

- Canon Off Camera Shoe Cord OC-E3

- Canon 430EX Speedlite Flash for Canon Pro1

Anyway, here are my first photos with this setup, with our cat as a model because she stays still better than other live models in the household (!). The one with whitish carpet and wall as background was really the first photo, slightly cropped but otherwise unedited, just connected the flash pieces and snapped the camera in automatic portrait mode. The one with the dark background is not even in an unlit room, it just had the nice effect that the flash lit the kitty and stuff you can't see, while overwhelming the less-lit background into black.

Only three weeks until Zack Arias' One Light Workshop. Hat tip to Ted Leung for letting me know about that workshop and inspiring me to try all this.

The site I'm building will make public health data more accessible. This means both findable and visualizable. Kai just came by my office and demonstrated a use case for both aspects of accessibility for this data.

Kai's task this morning is to put together a presentation which involves talking about the "long tail" of diseases: for every common disease that pharmaceutical companies target, there are a hundred orphan diseases. Pharmaceuticals typically ignore these because the revenues from a drug targeting an orphan disease are necessarily small.

A good graphic for Kai's presentation would be a classic long tail graph, with specific incidence rates filled in: possibly lung cancer on the left with a high incidence, tuberculosis in the middle with a vastly lower incidence, and Ebola at the real tail end. But how do you fill in the numbers for incidence of these diseases -- say, for the US, for a given year?

- National Cancer registries cover only cancer incidence and mortality

- CDC Mortality data covers all diseases, but mortality instead of incidence (e.g. prostate cancer is very common but not a common cause of death)

- CDC tracks infectious disease incidence, but not incidence of most non-infectious diseases

- The National Heart, Lung and Blood Institute publishes incidence of hemophilia

- The National Health Interview Survey counts things like anemia, and while you can find that page on the CDC site, the data are presented very differently from data on cancer or infectious disease...

Tuesday, April 15, 2008

In order to keep my life and work organized, the tool I use to track tasks has to be streamlined and seamless. Do you know what I mean? Any hassle or delay, and I'm likely to get distracted or postpone the bookkeeping of adding a new task or updating the status of a task. If I'm hampered in bookkeeping, I fall behind and the system becomes less trustworthy so I can even fall out of the habit of keeping it up-to-date simply because it's already not up-to-date. It requires discipline as well as ease-of-use.

My system is not just one single task list. That would be too long. Instead, it's organized roughly as "Getting Things Done" suggests, into contexts. This is crucial, for reasons I won't go into now. So one of my requirements is that I have several groups of tasks, and a task can only be in one group/context. Another requirement is that I be able to mark tasks as "done" to make them disappear (but not deleted).

For three years now I've been using iOrganize. It's a small and reliable note-taking program that I don't mind running constantly in order to do bookkeeping at a moment's notice. It has folders for "notes", and lists notes by title within a folder. Click on a note and a view pane shows the body text. I have a couple conventions to make this function as a task manager: I mark the tasks that have top priority with a ** so they show up in a quick search, and I drag a note to the _Done folder (which sorts last with the underscore) to mark as done.

The thing is, I unthinkingly upgraded to a new version when prompted, and a few things are driving me crazy, and worse, driving me towards dis-use.

- The search function now only searches in the current folder. This breaks my ability to quickly use search for ** to find top tasks. Even though the functionality to search all folders is still there, it now won't show me which folder the search results are in.

- Dragging a note to the _Done folder now changes the view to the _Done folder, when it used to (and I really want it to) keep the view on the source folder, the context where I'm getting stuff done.

I have been experimenting this morning with TextMate's awesome extensibility to see if I could use it for a task manager since I run it constantly anyway, but am running into barriers. Can I downgrade my running version of iOrganize instead?

Monday, April 14, 2008

Shortest recognizable quotes

Three words

- I'll be back

- Feed me, Seymour

- Yippee Kiyay, M*F*

- Et tu, Brute

- Phone's ringing, Dude

- Run, Forrest! Run!

- As you wish.

- Clatto... Verata... Necktie?

- God is dead.

- A Handbag!

- Inconceivable!

- Stella!

- Doh!

Saturday, April 12, 2008

Three thumbs up for the Chariot Cougar 2 I bought a couple weeks ago. When you use something day to day the smoothness of use matters a lot:

- One thumb for the easy way it attaches to the bike. It's got a ball joint to slip into a socket, held with a pin, and the pin is held with a rubber cover, and then a safety strap in case all that fails, but it's still fast to put together. Somehow with the Burley I never got snappy at getting everything all at the right angles.

- One thumb for the carrying capacity and organization thereof. I went to the grocery store today with D. and came back with two big (cloth) bags plus a watermelon. There's two exterior pouches in back, one of them very big yet it folds up when not in use, and small side pouches inside the cab for the spare yoke or Darwin's stuff.

- One thumb (Darwin's) for the comfortable, secure safety restraints. The chest yoke comes down over his head and snaps to a strap between his legs, and the seat belt snaps over that. Snap, snap, done.

You may ask if I'm turning into a diligent environmentalist, abandoning even my Prius in order to bike to work and the grocery store. Ok, well even if you don't ask, I'll tell you: there are different things mixed into the satisfaction I get from this practice. There's saving gas, yes, and knowing where I can find a spot to park without frustration. There's the physical well-being from exercise and feeling sun on my face or at least fresh air. I like to think I look pretty cool on the sharp red bike with red trailer, so yes there's a bit of pride too! Whatever keeps me pedaling.

Tuesday, April 08, 2008

Programmers sometimes shave yaks. But fiber artists sometimes engage in yak [hair] spinning, plying, winding and fulling -- and best of all, yak whacking. Yea, verily. Not sure which I'd rather do or not do right now.

Unit testing and doc testing are working in the Django-based project. A couple wrinkles:

1. In the unit tests, I created some model instances in the setUp() method easily. I then discovered that the setUp() method is called before every method in the test class, not just once when the test class is instantiated. Ah yes, I remember this from Java days too. Is there some deep reason why tests (in this case functional tests) shouldn't share the exact same setup but need to recreate it? The fix that seemed to meld most smoothly with what the unit testing framework offered, was to tearDown() all the model instances after every test method, even if I was going to use the same work for the next test.

2. Django only seems to pick up doctests in the models module. I found a hint online (can't even find it anymore) about creating a "__test__" dictionary and putting this in models.py worked:

__test__ = {"1": Source, "2": DataFrame}

In this case Source is a class in models.py, and DataFrame is a class imported from a file called query.py. The labels "1" and "2" are arbitrary and I don't yet know how they're used. What worries me about this is that I don't want to import everything into the models module in order to test it.
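Returning to the first wrinkle, here is a small sketch of the difference between per-test and per-class setup, using only the standard unittest module rather than Django's test runner. It assumes a Python version where unittest supports the setUpClass() hook; the class name, counters and fixture data are made up for illustration and are not from the project above.

```python
import unittest


class SharedFixtureTest(unittest.TestCase):
    # Counters (hypothetical, for illustration) recording how often each hook runs.
    per_class_calls = 0
    per_test_calls = 0

    @classmethod
    def setUpClass(cls):
        # Runs once, before any test method in this class.
        cls.per_class_calls += 1
        cls.shared_rows = ["1,207", "3,410"]  # made-up fixture data

    def setUp(self):
        # Runs again before every single test method.
        type(self).per_test_calls += 1

    def test_row_count(self):
        self.assertEqual(len(self.shared_rows), 2)

    def test_first_row(self):
        self.assertEqual(self.shared_rows[0], "1,207")


def run_demo():
    # Reset the counters so the demo can be run more than once.
    SharedFixtureTest.per_class_calls = 0
    SharedFixtureTest.per_test_calls = 0
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SharedFixtureTest)
    result = unittest.TestResult()
    suite.run(result)
    return result
```

The usual argument for per-test setUp() is isolation: a test that modifies its fixture can't affect the next one. Sharing through setUpClass() is a trade-off that probably only makes sense when the fixture is read-only or expensive to build.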

Monday, April 07, 2008

Thursday, April 03, 2008

Friday, March 28, 2008

I miss working closely with other developers; that's part of why I'm blogging right now.

Python exacerbates that, because there's so many ways to do things, particularly the kinds of things I'm doing right now, like parsing text files with tables in human-readable but not-particularly-computer-readable formats.

Here's a tiny example: I needed to remove commas from strings like "1,207" before converting them to integers to graph them. I thought of using slicing, which is a powerful Python feature on lists, strings and more:

>>> string="1,207"
>>> string[:string.find(',')] + string [string.find(',')+1:]
'1207'

It got ugly fast so I looked for something else:

>>> string="1,207"
>>> string.replace(',','')
'1207'

Splits are also powerful:

>>> string = "1,207"
>>> ''.join(string.split(','))
'1207'

Of course I could also define a simple "removefrom(char, string)" function:

>>> string="1,207"
>>> def removefrom(char, string):
...     return string.replace(char,'')
...
>>> removefrom(',', string)
'1207'
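Since the actual goal was integers to graph, the replace approach can be folded into the conversion itself. This is only a sketch: parse_count is a hypothetical helper name, and it assumes the comma is always a thousands separator and never a decimal mark.

```python
def parse_count(text):
    """Convert a human-formatted count such as "1,207" to an int."""
    # Strip surrounding whitespace, drop thousands separators, then convert.
    return int(text.strip().replace(",", ""))


# Example cells as they might appear in a human-readable table (made-up values).
counts = [parse_count(cell) for cell in ["1,207", "12", " 3,410,000 "]]
```

A cell that isn't a number at all will raise ValueError, which is probably what you want when parsing tables that weren't designed for machines.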

To find good ways of doing things, I end up browsing a lot of Python code online. That's frustrating because some sites have started hosting "sample code" mostly as a way to put advertisements on-screen and in pop-ups. And I'm sure the most trivial code review would identify plenty of areas my code could be much more Python-clever.

Thursday, March 20, 2008

Internships for CommerceNet this summer: I'll be the one hiring and managing. If we get a great proposal for a project from the right intern candidate, CommerceNet might simply hire that intern to work on that project. Otherwise, here's what I have in mind.

CommerceNet is an entrepreneurial research institute, dedicated to fulfilling the promise of the Internet. We are currently seeking Software Engineer interns to implement a data visualization Web application for public health information. The work involves JavaScript and Python, both data access and graphics. CommerceNet may also accept proposals for internships to work on well-specified projects of the intern's own design.

What you'll do:

- Develop open source libraries or widgets for graphing and data visualization

- Build public service, community oriented Web site

- Be part of a small team or work nearly independently

- Develop with minimal guidance, using rapid iteration and feedback loop and with leeway in choices of tools.

- Borrow, create or collaborate on visual design and visual elements

Required Skills:

- Web Applications development, including CSS and JavaScript

- Python or demonstrated ability to pick up languages

- MySQL or similar data management experience

- Great ability to extrapolate from raw ideas to realistic implementations.

- Demonstrated initiative pulling a project forward

- Some experience using graphics libraries

- Familiarity with Cleveland or Tufte principles would be a bonus

Email ldusseault@commerce.net with questions or cover letter and resume.

Tuesday, March 18, 2008

There is so much IETF work on email these days. There are five separate WGs (one in the Security area), a Research Group and a couple informal efforts. I tend to have to summarize the work to pull it together in my head. Here's the post-71st-IETF sitrep. Also see Barry's post for more detail on SIEVE, DKIM, LEMONADE and ASRG. Document links collected at bottom.

- The IMAPEXT WG is so close to shutting down, it did not meet last week. One of its work items was to internationalize parts of IMAP (including mailbox names, and how to sort strings like subjects) and those documents were delayed but finally got approved.

- The LEMONADE WG met, and seems to be winding down. Although its extensions are all linked by being useful to mobile email clients, there are some extensions there of general interest.

- The SIEVE WG just finished publishing a whack of documents around its new core spec, RFC5228. At its meeting, the group discussed whether to recharter to do another round on the core SIEVE documents and standardize some more filtering extensions.

- EAI WG has requested publication for most of its documents. These are Experimental Standards for using non-ASCII characters in email addresses, which affects IMAP, POP3, SMTP in interconnected and complicated ways.

- A design team is nearly done updating SMTP (RFC2821) and the Internet Message Format standard (RFC2822). They're handling "last call" issues on the list.