I put a bunch of work into a chart to knit myself a hat, so I decided to follow through and make it a pattern.

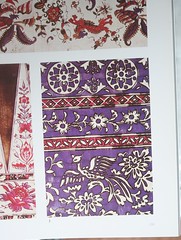

I was inspired by this fabric in a book of mine:

I knit it up and blocked it:

Added tassels:

And modeled it myself, too. All over Thanksgiving weekend.

Here's the pattern.

Tuesday, November 29, 2011

Thursday, September 08, 2011

The Story of Snow White and the Seven Dwarves and R2-D2

As requested by a four-and-a-half-year-old: "Yeah, tell me that one. Snow White. And put R2-D2 in it. And R2-D2 has to use his cannon."

Once upon a time, in a land far, far away, lived a young princess named Snow White. She was called Snow White because of her pale white skin. Her mother was dead, and her father was the king. One day her father remarried, and the new queen did not like Snow White. After a while it was clear that Snow White was just in her way. Snow White's best friend and trusty companion was R2-D2 the droid.

Then when Snow White was about sixteen, her father, the king, died suddenly. He left his kingdom to his daughter, but the step-mother intervened and ran things. She made evil choices that made the people of the kingdom work harder, and give more of their money to the queen, and go hungry. Snow White did not like this. Nor did R2-D2.

One day, R2-D2 was rolling along a castle corridor, when, with his fine droid sensors, he heard a conversation between the Evil Queen and a woodcutter. The Evil Queen was saying: "I can't take it any more. Snow White is criticizing my treatment of the rabble, and some of my ministers are agreeing with her. You must kill her when she's out in the forest today. Say that wolves got her."

R2-D2 went immediately to Snow White, of course, and warned her. He recommended that Snow White should escape the castle, to avoid being killed. Meanwhile R2-D2 would call in his friend the Prince from another country, who would come and help them deal with the Evil Queen. R2-D2 explained to Snow White how she could find the Seven Dwarves, who lived together and would hide her until it was safe to return to the castle.

Snow White went out into the forest earlier than usual and evaded the woodcutter. She found the Seven Dwarves' cottage, and asked for their help. Of course they agreed and said she could stay. The Seven Dwarves were Grumpy, Sleepy, Sneezy, Doc, Nosey, Geeky, and Skip. Each day they went away to work in the mines, and came back in the evening. Snow White started to help out by cleaning up after breakfast, and preparing a nice supper for when they came back, so the Seven Dwarves grew to love her indeed.

The Evil Queen knew that Snow White was now hiding, so she came up with a different plan. She used magic to disguise herself as a poor old woman selling apples. She put one special apple in her basket, just for Snow White; it had poison. She went around the area of the castle, asking if anybody had seen a pretty young girl, new in the area. Eventually she found a village where some people had noticed a pretty young girl who had recently started living with the Seven Dwarves, and so she went to investigate. She found Snow White, all alone in the middle of the day.

"Hello, my deary," she said. "Would you like to buy some apples?"

"Oh, those look delicious," Snow White answered. "But I have no money, I'm sorry."

"Well, you're such a pretty dear," the Evil Queen disguised as a poor old woman said, "I'll give you this apple if you give me the pleasure of eating it. An old woman like me doesn't enjoy life as much as you young folks, so I'll just take a break and watch you eat this apple." And she handed Snow White the apple prepared with poison.

Snow White took a bite of the apple and before she even finished chewing it, she swooned. That means, she fell over, right onto the ground, as if asleep -- or dead. The Evil Queen laughed and went back to the castle, convinced that Snow White was dead.

Meanwhile, don't forget, R2-D2 had sent a message to his friend, the Prince from another country. It took a long time in those days for a message to get to the other country, and for the Prince to ride to Snow White's country. The Prince was just arriving when R2-D2 heard the news the Evil Queen was spreading -- that Snow White was not just missing, but found dead, and that the Evil Queen was going to rule the country.

R2-D2 rolled out to meet the Prince. When the two friends found each other on the road, R2-D2 led the Prince to the Seven Dwarves' cottage. The Seven Dwarves had just arrived, and were moaning sadly about poor Snow White.

With his sensors, R2-D2 could tell that Snow White was just breathing very slightly, only barely alive. In droid speak (doot dweet peep brrrrr peep peep doot) he instructed the Prince to lift Snow White onto the table so that R2-D2 could use his gripper arm to open Snow White's mouth. R2-D2 found pieces of apple in her mouth, because she hadn't even finished swallowing the apple, so he took them out. Then he instructed the Prince in how to sit Snow White up to whack her back, to dislodge more pieces of apple. Together, they got enough of the poison apple out of Snow White so that she could recover.

The next day, Snow White, the Prince, and R2-D2 headed to the castle. Snow White took a bow and arrows just in case, and the Prince had his sword. When they arrived at the castle, the gates were locked and the Evil Queen was instructing the guards not to let them in.

"She's not the real Snow White! She's an impostor! Keep her out of the castle!" she yelled to the guards.

"I am the real Snow White!" Snow White shouted back. "You guards, you know me. I grew up in this castle!" And they did know her, so some of them put down their swords, and their bows and arrows. The Evil Queen grabbed one of them and tried to threaten his life with her dagger, so he would obey her, so Snow White shot a warning arrow. The Evil Queen had to hide from the arrows so she let the guards go.

The gate was still locked, so R2-D2 used his cannon to blast it open. Boom! The gate crashed open, and the threesome walked into the castle through the gate. But the Evil Queen was waiting for them, and she tried to jump on Snow White and kill her with a dagger. Luckily, the Prince was ready with his sword, and he swung it to protect Snow White, and cut off the Evil Queen's head.

Snow White thanked the Prince for saving her, although she said, "You shouldn't have killed her. We should have brought the Evil Queen to justice and put her in prison."

"I know," said the Prince, "But it was an accident. It was in defense."

"That's true," said Snow White. "Say, you seem like a nice guy. How about you stay here with me? I'm going to rule this kingdom, and do a much better job than the Evil Queen, too. If you give me good advice, and help me rule this kingdom, maybe we could get married."

"That sounds like a good idea," said the Prince.

R2-D2 said "Peeeeep doot dweet-deet doot brrrrr peep!"

And they all lived happily ever after.

Tuesday, August 30, 2011

My third post on doing RESTish JSON APIs in Rails is about testing the API. I'm assuming the network API should remain stable, not have fields added or removed without consideration (and ideally, documentation), especially if there are independently-written clients that use the server's API. So normal Rails "functional" testing is not enough here.

"Functional" testing is in scare quotes because it's got a specific meaning to Rails, not a generally-accepted meaning to programmers. It is how Rails defaults encourage programmers to test the actions of their controller classes, which is to say the results of their Web request handling (e.g. in the Rails guide, especially the section on testing views).

It gets pretty tedious to assert all the things are in a JSON response that should be:

assert_response :success

data = JSON.parse(@response.body)["data"]

assert_not_nil data["comments"]

assert_equal 1, data["comments"].length

assert_equal "The User", data["comments"][0]["display_name"]

assert_equal "the comment text", data["comments"][0]["comment_text"]

Worse, this only tests that things that are tested for are there, not that new things don't creep in. It's pretty easy to modify code in a way that passes what looked like reasonable functional tests, but breaks the client.

While working on improving our testing for API responses, I've found a few things are important to me.

- The tests should show what an entire response ought to look like (aids readability)

- The tests should flag things that aren't supposed to be there as bugs

- The test responses should be templates, so that variables can vary

- The output of a test should show the difference, so I don't have to wade through pages of desired output and pages of actual output

To get there, I'm using:

- Cucumber to describe the request, the response status and the response body

- Custom cucumber step definitions to let the step define the entire response body as a Ruby Hash

- A custom template "Wildcard" object to let variables vary within the response body

- Rails feature to compare hashes and find diff, to produce readable output

Feature: Commenting

Background:

Given the following post exists:

| id | 1234 |

And the following comment exists:

| id | 882281 |

| post_id | 1234 |

| comment_text | "O gentle son, upon the heat and flame of thy distemper sprinkle cool patience." |

| user_id | 101 |

Scenario: Retrieve a comment

When I GET "/posts/1234/comments/882281" with a user session

Then the response should have the following comment:

"""

{

"comment_text"=> "O gentle son, upon the heat and flame of thy distemper sprinkle cool patience.",

"created_at"=> Wildcard.new(String),

"updated_at"=> Wildcard.new(String),

"creator" => {

"user_id" => 101,

"handle" => "gertrude",

"display_name" => "Queen Gertrude",

"number_comments" => Wildcard.new(Fixnum)

},

"post_url" => Wildcard.new(String)

}

"""

The Wildcard class looks like this:

class Wildcard

def initialize(must_be_class=Object)

@must_be_class = must_be_class

end

def ==(other)

other.is_a?(@must_be_class)

end

end

And the Cucumber step that implements "the response should have the following comment" looks like this:

Then /^the response should have the following ([^"]*):$/ do |element, string|

the_hash = eval(string)

response_data = JSON.parse(@response.body)["data"][element]

assert_equal({}, response_data.diff(the_hash))

end

Note, what this means is that our API responses have "data" as the top element, with a "comment" element inside -- that part is asserted to be there, so that the contents of the comment can be compared with the Rails hash diff method. If the comment element in the response had an extra child, this would produce an error with the extra child in the diff. If the comment was missing a child, this would produce an error with the missing child in the diff. And if the comment has a child with a different value than what's in the template, this would produce an error with the returned value in the diff.
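
To make the three failure cases concrete, here's a minimal plain-Ruby sketch of the comparison that doesn't depend on Rails. The `template_diff` helper is hypothetical (it is not a Rails method; it only approximates what the hash diff does for flat hashes), and this Wildcard variant matches with `is_a?`, which is an assumption on my part so that the default `Object` matches any value.

```ruby
# Wildcard variant: equal to any value that is an instance of the given class.
class Wildcard
  def initialize(must_be_class = Object)
    @must_be_class = must_be_class
  end

  def ==(other)
    other.is_a?(@must_be_class)
  end
end

# Hypothetical helper, not part of Rails. Returns the entries of `actual`
# that the template does not match, plus template entries absent from `actual`.
# An empty result means the response matched the template.
def template_diff(actual, template)
  # The template value is the receiver of ==, so Wildcard#== gets called.
  mismatched = actual.reject { |key, value| template[key] == value }
  missing = template.reject { |key, _value| actual.key?(key) }
  mismatched.merge(missing)
end

template = {
  "comment_text" => "the comment text",
  "created_at"   => Wildcard.new(String)
}

ok      = { "comment_text" => "the comment text", "created_at" => "2011-08-30T12:00:00Z" }
extra   = ok.merge("debug" => true)                 # an unexpected child crept in
missing = { "comment_text" => "the comment text" }  # created_at was dropped
changed = ok.merge("comment_text" => "other text")  # value differs from the template

template_diff(ok, template)      # empty hash: the response matches
template_diff(extra, template)   # contains the extra "debug" entry
template_diff(missing, template) # contains the missing "created_at" entry
template_diff(changed, template) # contains the returned "comment_text" value
```

Asserting that the diff equals `{}` means a failure message shows only the offending entries rather than the whole response.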

I realize this is not Behavior Driven Development (BDD). One can use Cucumber to do BDD, but the two are not inextricably linked. And our intent here is not to do BDD but to maintain a stable API. I think this works.

Monday, August 29, 2011

This is another post outlining "How I spent several days frustratingly getting something to work" so that "you don't have to". I think the theory behind these kinds of posts is the "you don't have to" part but the reality is "OMG I have to rant and boy it would help put this behind me if I could pretend there was some USEFUL lesson."

I already posted that I've been using Jenkins and RVM. The theory is great: Jenkins lets you set up lots of recurring jobs and have one dashboard to see that all of your software is basically working, while RVM allows you to choose a different Ruby version and gemset for each project that might need that.

The first Jenkins job I set up seemed to use the RVM Ruby-1.8.7 just fine, but when I tried to set up a second job that required Ruby-1.9.2, I never could get it working. First it tried to use the system ruby, which I blew away. Then I spent hours tracking down references to the system ruby. The command "rvm use 1.9.2" appeared to work and chose the right rvm ruby directory. But even when it worked, when the very next command run by Jenkins was "ruby -v", the machine either couldn't find the ruby install at all, or found the system one, but never the one just successfully activated by RVM. Even more frustrating, this worked at the command line, but not when running a job from Jenkins. (If anybody can explain how Jenkins runs jobs differently and what effect that has on context, I'd love to know -- having a model of what's going on would help.)

Finally I discovered "rvm-shell", which can force execution of a command within the context of what RVM set up. So now every line of the jenkins job script that uses ruby, bundle or rake, is wrapped in that:

rvm use 1.9.2@profiles

rvm-shell -c "ruby -v"

rvm-shell -c "bundle install"

rvm-shell -c "rake db:migrate"

# Finally, run your tests

rvm-shell -c "rake"

It worked. 56 is the magic number -- that is, build #56 was the first one to work on this project. Phew.

Wednesday, August 10, 2011

I've been setting up Jenkins to run Rails project unit and functional tests as builds. This has been difficult, and 90% of the problems have been in getting different versions of various gems available. RVM is supposed to help, as is Bundler. I should have started out with a very methodical plan for giving access to the jenkins user, then having the jenkins user install rvm and create gemsets, then having the jenkins user install gems and run bundle install. Instead I got things working quickly with super-user permissions, and then builds failed because it's hard to 'sudo' commands during an automated build.

Here's one problem running "bundle install" as the jenkins user:

Using rails (3.0.4)

Using right_http_connection (1.3.0) from git://github.com/rightscale/right_http_connection.git (at master)

/usr/lib/ruby/1.8/open-uri.rb:32:in `initialize': Permission denied - right_http_connection-1.3.0.gem (Errno::EACCES)

from /usr/lib/ruby/1.8/open-uri.rb:32:in `open_uri_original_open'

from /usr/lib/ruby/1.8/open-uri.rb:32:in `open'

from /usr/local/lib/site_ruby/1.8/rubygems/builder.rb:73:in `write_package'

from /usr/local/lib/site_ruby/1.8/rubygems/builder.rb:38:in `build'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/source.rb:450:in `generate_bin'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/source.rb:450:in `chdir'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/source.rb:450:in `generate_bin'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/source.rb:559:in `install'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/installer.rb:58:in `run'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/rubygems_integration.rb:93:in `with_build_args'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/installer.rb:57:in `run'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/installer.rb:49:in `run'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/installer.rb:8:in `install'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/cli.rb:220:in `install'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/vendor/thor/task.rb:22:in `send'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/vendor/thor/task.rb:22:in `run'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/vendor/thor/invocation.rb:118:in `invoke_task'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/vendor/thor.rb:263:in `dispatch'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/lib/bundler/vendor/thor/base.rb:386:in `start'

from /usr/local/rvm/gems/ruby-1.8.7-p352@sonicnet/gems/bundler-1.0.17/bin/bundle:13

from /usr/bin/bundle:19:in `load'

from /usr/bin/bundle:19

After reading up on similar but different errors via Google, I concluded this was probably a local filesystem permission error. I tried granting the jenkins user various permissions in /usr/lib/ruby and /usr/local/rvm/gems -- one misleading clue was that the "right_http_connection-1.3.0.gem" file in /usr/lib/ruby/1.8/gems/cache was the only gem in that directory with a different user/group than the other gems. Fixing that didn't fix the problem, nor did deleting that gem file. I then realized that bundler puts gems in a different place: .bundle in the project directory. Oh, that's owned by root -- great, fix that. Oops, didn't work. Finally I deleted .bundle and ran bundle install again -- as the jenkins user -- and it worked.

(This is probably boring to most readers but it's a small payment for all the other bloggers' posts I've read that gave me clues in various debugging dead-ends.)

Friday, July 22, 2011

This week I was asked to review draft-ietf-hybi-thewebsocketprotocol-10, The Websocket Protocol, as it gets closer to being an IETF RFC. After I sent my review, I was prompted to look back and reminded myself that I wrote the first message to apps-review back when it had a separate mailing list, asking for the first review from the newly-formed team. This explains why I'm still reviewing IETF documents despite having no current IETF activity -- I feel I owe a few people a few reviews in return.

Websockets itself also brought back the memories. I approved the BOF, the "Birds of a Feather" meeting that organized the HyBi Working Group (WG), and approved the formation of the WG. I felt it was very important that the Websocket protocol be developed in a venue where we had server developers and transport and security experts as well as browser developers. We also had to ensure that the protocol was considered quite separate from the Websocket API which is part of HTML5. Much painful experience shows that while protocol libraries are hard enough to upgrade, the protocols themselves stay around for a long time and are even harder to fix or replace, so backward compatibility, versioning and extensibility are crucial.

While IETF progress for Websocket protocol was, as almost everything at the IETF is, agonizingly slow, I was glad to see that the near-end result has pretty solid HTTP compliance, solid framing and transport text, and deals with security concerns that weren't even on the radar before the IETF effort. Personal kudos to Joe Hildebrand, Alexey Melnikov, Ian Fette, Ian Hickson, Adam Barth and Salvatore Loreto, and kudos to everybody else who contributed.

Wednesday, July 13, 2011

Resourceful != RESTful

Resourceful routing in Rails is certainly useful. As is customary in Rails, you get a lot of stuff achieved with a very small amount of declarations. And at first glance it looks like REST! Yay! Except... it will lead one slightly astray. I've come across a few ways in which "resourceful" routing in Rails doesn't really follow REST principles, in ways that aren't merely theoretic but can affect intermediary behavior.

Resourceful routing defines paths or routes for objects that the server developer would like to have manipulated with CRUD operations. The developer can declare an 'invitation' to be a resource, and the routes to index all invitations, create a new invitation, download an invitation, update or delete the invitation, are automatically created. But, problem the first:

- Resourceful routing uses POST to create a new resource. PUT is defined as creating a new resource in HTTP, and intermediaries can use that information -- but only if PUT is used. If POST is used, an intermediary can't tell that a new resource was created.

All routes share common error handling. Route not found? Return 404! But Rails puts the method in as part of the route definition. Thus, problem the second:

- Rails returns 404 Not Found if the client uses an unsupported method on a resource that exists. So for example if I apply resourceful routing such that a resource can be downloaded with GET and updated with PUT, but don't define a POST variant route for that URL, then when the client tries to POST to that URL the server returns 404 because the route (with correct method) was not found. In theory an intermediary could mark the resource as missing and delete its cached representation. Instead, there's a perfectly good error to use in HTTP when a method is not supported on a URL, and that is 405 Method Not Allowed.

- URL parameters are mixed with body parameters. This may not cause problems for a POST, where the response typically isn't cacheable anyway. But it's a bad choice for GET requests, where the URL containing parameters affects caching, while other parameters are unseen by intermediaries.

- Query parameters are treated the same as path elements. Routes are defined as paths that can have parameters in them. So if the routes file defines a route for "/v1/store/buy/:product/with_coins", the :product path element could be any string, and Rails will pass that string into the application just as if it were a URL query parameter. However, caches are supposed to work differently if a URL has query parameters than if it does not, so treating them as the same is misleading the developer.
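
To illustrate the 404 versus 405 distinction from the second problem, here's a minimal sketch in plain Ruby. It is not how Rails routing is implemented; the `ROUTES` table and the `status_for` function are hypothetical names made up for this example. The idea is to look up the path first, and only then check the method, so an existing resource with an unsupported method gets 405 along with an Allow header listing what is supported.

```ruby
# Hypothetical route table: path => HTTP methods supported on that path.
ROUTES = {
  "/invitations/1" => %w[GET PUT DELETE]
}.freeze

# Returns [status, headers] for a request, separating "no such resource"
# from "resource exists, but not with this method".
def status_for(http_method, path)
  allowed = ROUTES[path]
  return [404, {}] if allowed.nil?                   # the path itself is unknown
  return [200, {}] if allowed.include?(http_method)  # path and method both match
  # The resource exists but the method is unsupported: 405 plus an Allow header.
  [405, { "Allow" => allowed.join(", ") }]
end

status_for("GET", "/invitations/1")   # 200
status_for("POST", "/invitations/1")  # 405, Allow: GET, PUT, DELETE
status_for("GET", "/nope")            # 404
```

With this split, an intermediary that sees the 405 has no reason to conclude the resource is gone.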

Monday, July 11, 2011

I was wondering if the term "guys" is becoming more gender-neutral over time. Have you ever referred to a group including some women as "guys"? I've used the term to refer to groups made up entirely of women, but only in the second-person plural sense in casual or sporty situations. E.g. I'd say "Are you guys ready" to a group of women I was about to go running with. I wouldn't say "I'm going running with the guys from work" if it was women from work because that would imply men. And I'd never ever use the term "guy" for a woman or girl, in a first, second or third person singular context. I might at a stretch say "I'm just one of the guys", but that's plural first person. Very strange, but Wiktionary seems to document this as common.

Even stranger, however, is the derivation of the word "guy". From a proper name to a derogatory term to a generic term. I found this on Online Etymology, but I'm going to reword the explanation in chronological order with some of the inferences filled in, because I had to read their explanation three times for it to make sense.

- Guy Fawkes got his name from an Old German word for "wood" or "warrior", or possibly Welsh for "lively" or French for "guide" -- but it was a standard boy's name at the time at any rate.

- Guy Fawkes planned the failed Gunpowder Plot to blow up the House of Lords.

- Thereafter, Guy Fawkes Day was celebrated with fireworks and bonfires, and an effigy of Guy Fawkes was paraded through the streets before being set on fire. The effigy would have been a straw man wearing cast-off clothing.

- The name Guy would have been so associated with this effigy, that calling somebody a "guy" must have brought to mind a badly-dressed scarecrow figure at that time.

- The term became more generic, from meaning "badly-dressed fellow" to meaning "ordinary man", over the course of just a generation.

I wonder if there's a term for when a derogatory word becomes unobjectionable, and whether this usually happens by being appropriated (the people the term refers to use the word proudly) or just by being watered down.

Thursday, July 07, 2011

I learned about Medium Maximization behavior the other day and thought about how Scrum works. It's an interesting lens.

When people engage in medium maximization, they maximize a proxy for a real goal, rather than maximizing progress towards the real goal. The proxy or medium could be points on an exam as a proxy for understanding a topic, or air miles as a proxy for plane tickets. People do odd things for air miles that they wouldn't do for an equivalent direct reward. More details at http://faculty.chicagobooth.edu/christopher.hsee/vita/Papers/MediumMaximization.pdf - there are quite a few interesting side effects involved.

Applying to scrum

Scrum assigns story points to small accomplishable goals intended to eventually combine to reach a finished project. Since story points are not the same as the goal, story points are a medium, and we should expect participants to put more weight on accomplishing story points than on finishing the project, changing their behavior compared to when story points are not used.

I fully believe this behavior works as much for the managers and stakeholders in Scrum as for the engineers. In fact it might be more so: the only thing managers can measure is story points, not actual useful code, so managers are probably more satisfied by story point burndown than engineers are. Engineers have more visibility into what's being achieved towards a completed project, so that can have more influence on their choices, but I'm quite sure engineers are influenced too.

Predictions

A hypothesis like this can be tested by making predictions that can be tested and measured. I don't propose to actually study this myself, but making predictions and finding things that can be measured is fun.

In the studies done by Christopher Hsee and co-authors, participants had to accomplish tasks to get rewards. In the control set, the participants contemplated doing more or less work for different rewards. When a medium was introduced, and more points were disproportionately offered for more work, participants did much more work on average, even though the rewards that the points could be exchanged for were the same. Applying this to scrum, we should find that scrum participants do more work to burn down more story points, especially if the scale is non-linear. Imagine that management could somehow set the story points such that doing 40 hours of work accomplished 40 story points, but 50 hours of work accomplished 75 story points. Participants would likely work more, and this can probably be measured.
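Just to spell out the arithmetic in that imaginary schedule, here is a small Python sketch. The only inputs are the two data points from the paragraph above (40 hours for 40 points, 50 hours for 75 points); the variable names and the `points_per_hour` helper are mine.

```python
# The two points on the imaginary schedule: (hours worked, story points).
BASE_HOURS, BASE_POINTS = 40, 40
LONG_HOURS, LONG_POINTS = 50, 75


def points_per_hour(hours_from, points_from, hours_to, points_to):
    """Average story points earned per hour between two points on the schedule."""
    return (points_to - points_from) / (hours_to - hours_from)


# Rate over the first 40 hours, then over the 10 extra hours.
base_rate = points_per_hour(0, 0, BASE_HOURS, BASE_POINTS)
extra_rate = points_per_hour(BASE_HOURS, BASE_POINTS, LONG_HOURS, LONG_POINTS)

print(base_rate, extra_rate)  # 1.0 3.5
```

So each of the ten extra hours would be "worth" three and a half times as many points as a regular hour, which is the kind of disproportionate medium the prediction relies on.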

Another prediction comes from the studies that show that the behavior of maximizing the proxy points continues even if the primary reinforcer is removed. In software development, a team might continue working on story points even if the product plan had been cancelled, unless they were told to stop. This sounds unlikely and wrong, but imagine instead a project is rumoured to be cancelled soon due to a possible merger. A manager might prefer their team make solid progress on story points even with that rumour flying around. If development teams using scrum show less loss of morale and effort when such rumours are flying around than teams not using it, this would be good evidence that the teams were maximizing story point burndown rather than project release progress.

The last prediction is based on the results of applying a linear medium -- for each chunk of work you get the same number of points -- to a non-linear reward. In one study, participants had a reward with decreasing rates of return offered. When a linear medium was offered, but the exchange rate for points to reward had the same decreasing rate of return, participants did much more work. In software engineering it's commonly observed that the last 20% of the work takes the last 80% of the time, so software projects are arguably non-linear in the same way the rewards in this study were. If story points offer a linear medium with respect to effort -- which in classic scrum they do -- we should see engineers happy to do more work even as the project gets into its depressing cleanup and bugfix phase. (See note below)

Joking Suggestions

(I'm only playing with this lens, not suggesting real changes to scrum processes. Much of the focus on Medium Maximization is on figuring out how to get the most effort out of participants. If the goal of scrum is to get more effort out of people then Medium Maximization is very relevant, but if the goal is agility, job satisfaction, schedule predictability, or product quality, it might be less relevant.)

If the goal of scrum is to maximize engineering effort, then Medium Maximization studies do suggest that scrum teams should *earn* story points rather than burning them down. Scrum tracking tools should award points to the whole team (working for the team is definitely a stronger motivator than working individually) and display the current point total prominently. The tools might display both the overall point accumulation and the total for the current sprint or for a rolling window of the last X days.

Calibrating the way story points scale is very important. The allocation of story points should definitely not show a decreasing margin of return. Very large tasks should never have insufficient story points, else work stops earlier. If we assume that story points are still assigned by engineering estimates, we can still use processes to influence this:

- Since we know people underestimate large chunks of work worse than they estimate small chunks of work, we should avoid having large chunks of work. Scrum often does this already by having a ceiling on story points. Some scrum practitioners assign no more than 5 story points: anything greater requires them to break down the story. That might not always have the intended effect -- in a meeting that's already taking too much time from too many people, the team might estimate the story at the maximum value rather than immediately have to reconsider the whole definition and breakdown of the story.

- Some types of engineering effort are often overlooked -- merging code branches, testing, deploying -- which is bad, because fewer story points are estimated for the last bit of effort than that effort truly warranted. In order to avoid that trap, we could always make those chores explicit and have estimates based on past merges rather than let engineers re-estimate too optimistically. (Yes, engineers estimate the same type of task over-optimistically, over and over and over again). Alternatively, a team might add a minimum of X story points to any story that required merging, testing or deployment, and again that X value would be based on real history.

- We might have the engineers give all their estimates for a story and always pick the largest estimate.
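In the same joking spirit, the three rules above could be sketched in a few lines of Python. Everything here is hypothetical: `MAX_POINTS` is the 5-point ceiling some practitioners use, `CHORE_MINIMUM` stands in for the "X" that would really come from a team's merge and deploy history, and `story_points` is a name I made up.

```python
MAX_POINTS = 5     # ceiling: anything bigger has to be broken down
CHORE_MINIMUM = 1  # placeholder for "X"; should be based on real history


def story_points(estimates, needs_chores):
    """Combine the engineers' estimates using the three rules above."""
    points = max(estimates)      # always pick the largest estimate
    if needs_chores:
        points += CHORE_MINIMUM  # merging, testing and deploying are never free
    if points > MAX_POINTS:
        # Refuse to record an oversized story instead of quietly capping it.
        raise ValueError("story too large: break it down before estimating")
    return points


print(story_points([2, 3, 1], needs_chores=True))  # 4
```

Raising an error rather than clamping to the maximum is a deliberate choice in this sketch: clamping is exactly the "estimate it at the maximum and move on" shortcut described in the first bullet.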

Rather than write the above sections with lots of caveats, I saved my own criticisms for this section.

- I stated that using story points which estimate effort detaches work from the real goal of adding functionality to a software project. But I neglected to mention that part of scrum is tying story points to demos of releasable functionality. That presumably helps keep story points better moored to real progress.

- Scrum and assigning story points helps break down big projects. Breaking down big projects into small tasks and tracking those tasks is nearly ubiquitously practiced in software engineering, probably because it's a good idea. Enforcing a good idea through well-rehearsed process is probably a good idea too.

- Medium maximization is easier to measure if your goals and rewards are clear. In software engineering, the goals are often unclear: product designers don't know exactly how everything ought to work until they can start to see it work, and then make changes. Features get cut due to lack of time. So detaching work from shipping a "complete product" might be a *good* thing -- it stops engineers from doing work on features that might get cancelled next week, and stops them from putting effort into scalability or robustness or abstractions that might not be needed. And if you don't know your goals at all, then scrum at least keeps people from yelling at each other over an apparent lack of progress vs lack of specifications.

- On the OTHER other hand, I imagine medium maximization is stronger in the absence of clear goals and clear relationships between the medium and the goal. If you don't know if you plan to go to Vancouver or to London next year, and if the airline can always change how many points get you a ticket to each destination, then you can't plan exactly how many air miles you're going to need and how to get those air miles at least cost. Unclear medium/goal relationships will make people try to accumulate more of the medium than they estimate they might need, in order not to fall short. When engineers really don't know where the project is going, scrum will at least keep them resolving story points, and thus preserve the appearance of forward motion.

Sunday, March 13, 2011

A few weeks ago, I took a class with Kathy Zimmerman at Stitches West. Kathy designs beautiful sweaters, and cabling is definitely her signature design element. She's also the author (star?) of an instructional DVD on cabling, and owns a yarn store in Ligonier PA.

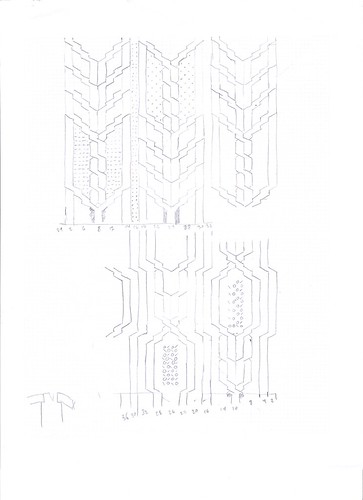

Kathy showed off some of her sweaters, which was a class unto itself on proper finishing techniques. She introduced cabling charting, which I already love, and got us started customizing charts. I was inspired by a couple of her cables and immediately started sketching freehand, then translated into this:

Then we spent the rest of the class swatching our designs. I knit the bottom chart out of handspun three-ply from Frank the sheep

and the top one out of white worsted Plymouth Yarn Encore, which shows off cables nicely.

We also sketched out a plan for an entire sweater based on the brown sample! I don't know yet if that's what I'll make from that yarn when I'm done spinning it, but possibly. I'll need to check how much yardage I have when I'm done.

Thursday, March 03, 2011

Jodi Green posted a link to TVO Archives. That is, the archives of public television in Ontario -- a personal wayback machine. I found "The Polka-dot Door", one episode, but more than enough to remind me of watching that show when I was six. Yikes. Today that show seems surreal and creepy.

Some of the interviews and forum shows are interesting to me now. There is an interview with Margaret Atwood at age 36 (she says "I'm old..."), where she comments a lot on how she is perceived and received by the public.

- "If they're afraid of successful women, you're viewed as a witch. If they view you as a mother figure, they want you to solve their problems."

- "I was talking about economics and politics, and we all know that girls aren't supposed to think about those things, much less talk about them."

In an interview twenty years later, on the publication of Oryx and Crake, she talks more about literature and politics than about perceptions of herself:

- "You do get these ideas for books that come more or less complete, you just work them out."

- "You can't tell authors what they need to do." on whether authors should be told to discuss politics

- "America is our big neighbour. And if they go down, we go down. So of course we worry. If a person blows you up on some street or other, he isn't going to see your teeny-weeny maple leaf."

- "I got tired of people saying, why do you always write about women." (on Jimmy, main character of Oryx and Crake)

Also an interview with Mordecai Richler, 1994, about his book "This Year in Jerusalem", where he talks about his changing opinions on Zionism from 1943 through to the date of the interview.

- "I'm saying the Israelis invoke the dead for mean political purposes."

- "I don't think most Israelis want to be on the West Bank as an army of occupation fighting Arab kids. It's very embarrassing."

And more cool stuff, like a discussion of the letters between Pierre Trudeau and Marshall McLuhan, comparing them to a Philosopher King and Court Philosopher, or a collation of opinions on selling movies, with Sydney Pollack, Robert Altman, John Milius and Roger Corman.

Saturday, February 26, 2011

Apple, I am incredibly frustrated.

I used to have an iPhoto plugin that published photos to Flickr. When I last updated iPhoto, I was initially happy to see that iPhoto seemed to have the same functionality built-in. I created an album that shared photos to Flickr and used it for a few months, dragging new photos in when I wanted them uploaded. Then I needed to move stuff to a new laptop so I went and archived years-old photos and deleted iPhoto albums I didn't need.

Two weeks later, I find missing photos in my Flickr photo stream, and broken links on Ravelry. Slowly I piece it together: when I cleaned out iPhoto on the old laptop, it silently deleted Flickr photos. Gone are the uploaded versions, titles, tags, descriptions I wrote, comments other people left, and counts of views. I can recover the photos themselves but I can't recover metadata. Most difficult to fix are broken links on other sites.

How could the designers have possibly thought it would be a good feature to silently delete published photos that have metadata of their own? Do the words "Share" and "Publish" not imply putting the photos out there? I tried this again and the words "Synch" and "delete" are not in the iPhoto UI -- the fact that I granted iPhoto permission to delete photos does not imply that deleting an album will delete them online. After all, deleting an album in iPhoto does not delete the photo in iPhoto, so the UI has trained me to think of albums as selections of photos, not containers of photos.

Apple, you had the chance to fix this. When I go looking for support for this, I see posts from obviously distressed and surprised users about thousands of photos deleted, and workarounds posted in response. Surely this allowed you to fix the problem within the last year? You could have issued an update to Flickr with the feature "Saves you from unknowingly destroying personal information online", but you did not. I rather expect this kind of behavior from you, Apple; your pride in infallible UI design is well-known, but that does not stop me from being disappointed.

Flickr, I'm disappointed in you too. I know this is not your fault, but many postings in your forums have asked you to disable iPhoto's permission to delete. You could have done that or made mass-deletes "soft-deletes" or asked the flickr account holder to confirm the loss of metadata. You, too, had the chance to save users from losing image metadata.

Saturday, February 12, 2011

I've noticed an interesting link between art and software, specifically a parallel to the Scrum process and the existence of Certified Scrum Masters: there are ZenTangles with a specific process for creating art or doodles, and Certified ZenTangle Teachers.

Besides the terminology, both ideas are about regulating and confining what are normally considered open-ended, unbounded problem areas. In ZenTangles, there are arbitrary rules: how many strings to start your ZenTangle with, precisely what fine-tipped pen to use, and the most emphasized restriction, the size of paper is 3.5" by 3.5". Scrum often fetishizes details like planning poker, estimate roshambo, and post-it notes (full disclosure: I've participated deeply in the fetishization of stickie planning).

Then, both approaches have the practitioner routinely break down work into small manageable units and focus on completing units. Breaking down even further, Scrum has recipes for small parts of scrum (like this recipe for a retrospective) and ZenTangles have recipes for line and space doodles. Finally, both approaches celebrate the fact that the "finished" output (a sprint, interval or doodle) is small and done quickly.

Are Zentangles art? Does Scrum produce good design? When do you follow the limitations and when do you depart from them?

Updated: here's my first zentangle, doodled while I was writing this post! Not on 3.5 square paper, nor with the right pen, nor did I follow the "string" process first -- I was always bad at staying within creative bounds.

Thursday, January 13, 2011

I downloaded and played Civ V last night. I watched through the high-quality animated movie intro, and noticed how carefully the old man, the hut, and the young man were culturally mixed. Their skin was brown, but could have been from suntans and wear/age. The old man's hair was grey, the young man's hair was hidden. The clothing (including a turban-derived headgear on the young man) was carefully invented and mixed inspirations from different cultures, as far as I could tell. I am convinced this introduction was designed to be culturally neutral, as if the old man and his son could have been the early leaders of any of the cultures used in Civilization.

So it finally struck me: it's all male. They couldn't make it gender-neutral.

The original Civilization had 13 male leaders and one female (Elizabeth I was the default leader of the English, though you could type in "Henry VIII" or "Tony Blair" or "Rowan Atkinson" if you felt like it.) I didn't really notice at the time. Civ II had a female and a male leader for each civilization, which was in-your-face obvious gender equality. It meant that some famous women got exposure (like Catherine for the Russians) but others were pretty unknown (I'm thinking Nazca for the Aztecs, and Ishtar for the Babylonians -- and can you even guess which Civ had "Bortei" as its female leader?)

This kind of public, forced gender equality was what finally made me notice once in a while what role females were expected to play in video games. Like being rescued in Super Mario, rather than being the rescuer. Back when I was just a teenage gamer, I didn't ever notice. I was oblivious. It still can take me a while before I notice, like I spent all that time noticing the careful cultural neutrality in Civ V before it finally hit me there was no attempt at gender neutrality in that introduction.

My point? Considering how blunt and ham-handed the stereotyped roles for females in video games are, the time it took me to start noticing, even though I am a woman, is an indication of how poor I am (and I know most people are) at even noticing gender bias.
